This issue of Root & STEM sparks compelling questions, stories, and lessons at the intersections of artificial intelligence (AI), cybersecurity, machine learning, and online safety. Based on research funded by the Ontario Principals Council, we take an in-depth look at the new reality that AI technology is creating and open a discussion around how we can use this technology to create a better and safer world.

This issue of Root & STEM was made possible by funding from The Government of Canada, CIRA, and the Office of The Privacy Commissioner of Canada.

Guest Editorial

The Future of Artificial Intelligence

This issue of Root & STEM explores how artificial intelligence (AI) is sparking compelling questions, stories, and lessons surrounding machine learning and online safety. Based on research funded by the Ontario Principals Council on AI, this issue is packed with modern, innovative approaches to online safety, particularly when it comes to teaching and learning. You’ll find articles and illustrations from artists, educators, and AI experts exploring these topics. From the ways AI technology can support online learning to the ethical issues surrounding privacy to the roles and methods AI is reshaping forever, we take an in-depth look at the new reality that AI is creating and open a discussion around how we can use this technology to create a better—and safer—world.

What is artificial intelligence? How is it used?

Artificial intelligence (AI) is a rapidly evolving field. The term refers to the convergence of a number of different technologies. Essentially, it uses predictive modeling and data analysis to have machines do things that humans can do, or have been doing until now. Today, we find AI involved in an ever-increasing number of aspects of daily life. For example, it is used in the medical profession to detect cancer by analyzing biomedical data. That’s a predictive way of using AI. Another example is the use of AI in vehicles, where it can optimize the condition of the engine or predict the best driving route.

What are some of the ethical risks that come with AI?

The ethical risks associated with AI are manifold because the predictions it can make are only as good as the learning model it is provided. Therefore, researchers have to make all sorts of judgements when they are creating the models with which AI works. One example of human bias is racial profiling, which involves dangers like the creation of facial recognition models that detect only certain ethnic or racial features based on biased training data. As an associate professor at Ontario Tech University and a practising lawyer who focuses on information privacy law in relation to AI, I am acutely aware of how discrete bits of information can be used to reveal personal, private information.

All design (from rocking chairs to bathtubs, running shoes to rocket ships, sofas to skyscrapers) is inherently biased precisely because the designers have a certain set of users in mind. Now, the questions are: Who are the people involved in design decisions? And with what consequences? There are rules or regulations that attempt to make those decisions more transparent or more easily explained to the user. While AI is very powerful, it’s not foolproof. And so, it’s really important to apply human judgement to prevent the various types of discrimination that can occur when bias is not identified and countered. As AI develops, its regulation will take time. So there are two very difficult problems to solve. There are many potential solutions as well. All of them come with trade-offs. So the answer is to really understand the risk involved and make adjustments as needed. That’s the best we can do.

How can we use AI in a safe and secure way?

There has been enormous progress throughout the world due to AI. It’s a game changer in terms of research and development. However, it’s a tool and, like all tools, it requires judgement and discernment to be used appropriately. Most technological development comes with a certain amount of risk, especially regarding user privacy. There’s no getting away from that. In fact, everything comes with a certain amount of risk. When you get into a car, for example, you accept all sorts of risks, likely without actively thinking about them. The key is not to focus on avoiding risks at all costs, but on mitigating them. AI is a powerful and potentially beneficial technology. It should be adopted widely for the benefits it offers. But there’s no reason to have blind faith. Technologies are human artifacts and should be regulated as such. Machine learning technologies are code: pattern detectors and predictors. At this moment, that’s all they are. They are not to be used as a substitute for human experience or wisdom. It’s up to us to use what we as humans have and what AI lacks: human intelligence.

– By Dr. Rajen Akalu

Featured Content

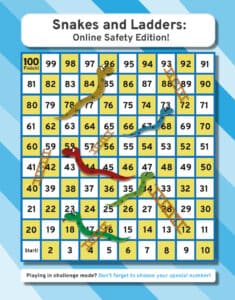

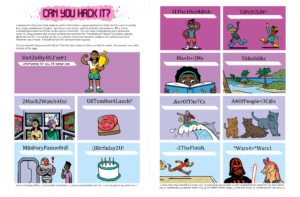

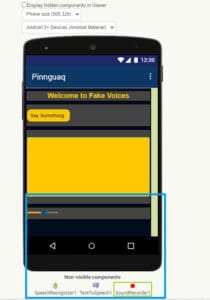

Below is a collection of stories, activities, comics, and lesson plans featured in the eighth issue of Root & STEM.