Overview

Students learn about natural language processing (NLP) and how it works. They also learn how to apply the steps of NLP to a text, how computers identify and tag words, and how voice-generated technology affects many aspects of daily life.

Learning Objectives

- Understand natural language processing (NLP)

- Understand the applications of NLP, such as Alexa, Google Home, and Siri, in daily life

- Be aware of educational uses of NLP tools, including autocorrect and predictive text, grammar-checking apps (e.g., Grammarly), and translation tools (e.g., Google Translate)

- Understand some of the ethical implications of the use of NLP tools and the threat they may pose to online safety and privacy

Vocabulary

- Text-to-speech: a type of assistive technology that reads digital text aloud. It is sometimes called read-aloud technology.

- Speech-to-text: also known as automatic speech recognition (ASR), a technology that converts spoken audio into text.

- Speech recognition: a capability that enables programs to process human speech and convert it into written text.

- Natural Language Processing (NLP): the branch of AI that gives computers the ability to understand text and spoken words in much the same way human beings can.

Curriculum Links

Ontario

- Grades 4 to 6 – A2: Coding and Emerging Technologies

  - A2.1: write and execute code in investigations and when modelling concepts, with a focus on producing different types of output for a variety of purposes

  - A2.2: identify and describe impacts of coding and of emerging technologies on everyday life, including skilled trades

Materials

- Phones or tablets (iOS or Android)

- Laptops/Chromebooks

- Wireless internet access

- Text of any kind, such as a story or a song

- Pens and paper

Non-Computer Activity

Task 1 – What Is Natural Language Processing?

Natural language processing (NLP) refers to the branch of computer science—and, more specifically, the branch of artificial intelligence (AI)—concerned with giving computers the ability to understand texts and spoken words in the same way human beings do. NLP combines computational linguistics—the rule-based modelling of human language—with statistical machine learning and deep learning models. Together, these technologies enable computers to process human language in the form of text or voice data and to “understand” its full meaning, complete with the speaker’s or writer’s intent and sentiment.

Let’s break down natural language processing (NLP) into five steps:

- Listening or Reading:

  - What it means: Just like you listen to a friend talk or read a book, computers “read” the words you type or “listen” to the words you say.

  - Example: When you ask Siri, “What’s the weather like today?”, it first has to “hear” or “read” your question.

- Breaking It Down:

  - What it means: Computers split sentences into smaller parts, like words and phrases. This is a bit like breaking sentences down into nouns, verbs, and adjectives in school.

  - Example: Computers might split the question “What’s the weather like today?” into the words “What,” “is,” “the,” “weather,” “like,” and “today,” plus the question mark “?”, which shows that it is a question.

- Understanding the Meaning:

  - What it means: Computers have to figure out what the words and phrases mean. Sometimes, words can have more than one meaning.

  - Example: The word “bat” can mean a flying animal or something you use in baseball. It can also be a noun (“a bat”) or a verb (“to bat”). Computers use the other words in the sentence to help figure out which meaning is right.

- Thinking of an Answer:

  - What it means: Once computers understand what you’re asking, they work out the best way to answer. This is like when you think before answering a question in class.

  - Example: After understanding your question about the weather, the computer might “think,” “I need to find today’s weather for this location.”

- Responding:

  - What it means: Finally, the computer gives you an answer, either by showing it on the screen or speaking it out loud.

  - Example: Siri might say, “It’s sunny and 22 degrees today.”
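The five steps above can be sketched as a tiny Python program. This is a toy illustration under stated assumptions, not how Siri or any real assistant works: the function names are hypothetical labels for each step, the program only recognizes the word “weather,” and the answer comes from a made-up dictionary (`FAKE_WEATHER`) rather than a real weather service.

```python
import re

# Made-up data standing in for a real weather service (hypothetical).
FAKE_WEATHER = {"today": "It's sunny and 22 degrees today."}

# Contractions we know how to expand (a tiny, hand-made list).
CONTRACTIONS = {"what's": ["what", "is"]}

def listen(text: str) -> str:
    """Step 1: 'hear' or 'read' the input (here, just tidy the typed text)."""
    return text.strip()

def break_down(text: str) -> list[str]:
    """Step 2: split the sentence into lowercase words and punctuation marks."""
    tokens = []
    for piece in re.findall(r"[A-Za-z']+|[?.!,]", text.lower()):
        # Expand contractions such as "what's" into "what" and "is".
        tokens.extend(CONTRACTIONS.get(piece, [piece]))
    return tokens

def understand(tokens: list[str]) -> dict:
    """Step 3: work out what the user wants from key words."""
    return {
        "is_question": "?" in tokens,
        "topic": "weather" if "weather" in tokens else "unknown",
        "day": "today" if "today" in tokens else None,
    }

def think(meaning: dict) -> str:
    """Step 4: decide how to answer."""
    if meaning["topic"] == "weather" and meaning["day"] in FAKE_WEATHER:
        return FAKE_WEATHER[meaning["day"]]
    return "Sorry, I don't know how to help with that yet."

def respond(answer: str) -> str:
    """Step 5: give the answer (a real assistant might also speak it aloud)."""
    print(answer)
    return answer

question = "What's the weather like today?"
tokens = break_down(listen(question))
print(tokens)  # ['what', 'is', 'the', 'weather', 'like', 'today', '?']
respond(think(understand(tokens)))  # It's sunny and 22 degrees today.
```

Any question without the word “weather” falls through to the “Sorry” answer, which shows why real assistants need far richer models of meaning.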

Activity Idea: Word Meaning Detective!

- Give students a list of words that have multiple meanings (e.g., “bat,” “bank,” “bark”).

- Ask them to think like a computer and use the other words in a sentence to figure out which meaning is being used. For instance, in “I keep my money in the bank,” the word bank refers to a place where money is kept and not the side of a river.

- Have students think of other words that, if used out of context, might confuse a computer. For example, the word “bark” could mean either the sound a dog makes or the outer layer of a tree.
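A computer can play Word Meaning Detective by counting how many of the other words in a sentence match clue words for each meaning. The sketch below is a simplified version of this overlap idea (similar in spirit to the Lesk algorithm); the clue-word lists in `SENSES` are made up for illustration, and real systems learn such associations from large amounts of text.

```python
# Hypothetical clue words for each meaning of an ambiguous word.
SENSES = {
    "bank": {
        "place where money is kept": {"money", "deposit", "account", "cash", "save"},
        "side of a river": {"river", "water", "fish", "shore", "grass"},
    },
    "bat": {
        "flying animal": {"cave", "night", "wings", "fly", "flew"},
        "baseball bat": {"baseball", "hit", "ball", "swing", "player"},
    },
    "bark": {
        "sound a dog makes": {"dog", "loud", "loudly", "puppy"},
        "outer layer of a tree": {"tree", "trunk", "rough", "branch"},
    },
}

def guess_meaning(word: str, sentence: str) -> str:
    """Pick the meaning whose clue words overlap most with the sentence."""
    # Lowercase each word and strip surrounding punctuation.
    context = {w.strip(".,!?").lower() for w in sentence.split()}
    context.discard(word)  # the ambiguous word itself is not a clue
    best_sense, best_score = "unknown", 0
    for sense, clues in SENSES[word].items():
        score = len(context & clues)  # number of shared words
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense

print(guess_meaning("bank", "I keep my money in the bank."))
# place where money is kept
print(guess_meaning("bark", "The tree bark feels rough."))
# outer layer of a tree
```

If no clue words appear (for example, “I saw a bat.”), the sketch answers “unknown,” which is exactly the kind of confusing case students are asked to look for.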

The processes of NLP can be replicated in an unplugged environment by following these steps:

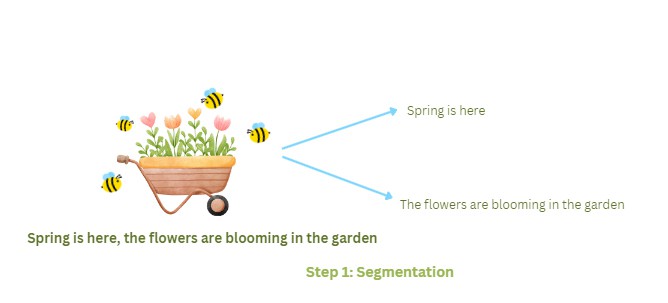

Segmentation: This is the “listening and reading” step. Break a text down into smaller segments, such as sentences and phrases, using its punctuation and word order as guides.
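Segmentation can be sketched in Python with regular expressions. This is a minimal sketch that assumes every sentence ends with “.”, “!”, or “?” followed by a space, and that commas, semicolons, and colons mark phrase boundaries; the function names `segment` and `split_phrases` are hypothetical. Real segmenters handle harder cases, such as the period in “Mr.”, which this sketch would wrongly treat as the end of a sentence.

```python
import re

def segment(text: str) -> list[str]:
    """Split a text into sentences wherever ., ! or ? is followed by whitespace."""
    # The lookbehind (?<=...) keeps each punctuation mark attached to its sentence.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]

def split_phrases(sentence: str) -> list[str]:
    """Split one sentence into smaller phrases at commas, semicolons, and colons."""
    return [p.strip() for p in re.split(r"[,;:]", sentence) if p.strip()]

story = ("Long ago, the land was kept clean so the animals would have secure "
         "migration routes. Even bones were not to be disposed of in the water.")
for sentence in segment(story):
    print(split_phrases(sentence))
```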

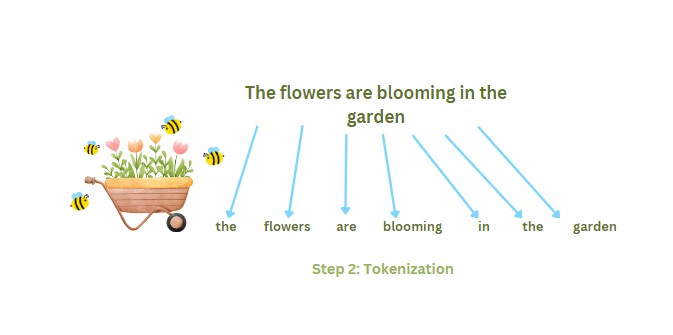

Tokenizing: For the computer to comprehend the full text, each word needs to be understood individually. Phrases are broken down into their constituent words. Each word is called a token. This process is called tokenizing. This is the “breaking it down” step.
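Tokenizing can be sketched the same way. This minimal sketch (the `tokenize` name is hypothetical) treats each run of letters or digits, including one internal apostrophe as in `don't`, as a single token, and each punctuation mark as its own token.

```python
import re

def tokenize(text: str) -> list[str]:
    """Split a phrase into word tokens, keeping punctuation marks as separate tokens."""
    # \w+(?:'\w+)? matches a word, optionally with an apostrophe part such as "'t".
    # [^\w\s] matches any single character that is not a letter, digit, or space.
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", text)

print(tokenize("In wintertime, igluit were oriented to face the sun."))
# ['In', 'wintertime', ',', 'igluit', 'were', 'oriented', 'to', 'face', 'the', 'sun', '.']
```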

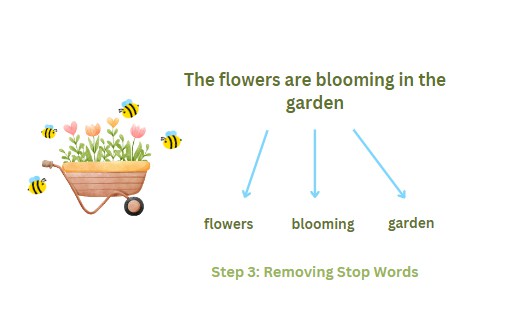

Removing stop words: The process can be sped up by removing non-essential words, such as “the,” “are,” and “in,” from the text. By doing this, the machine tags only the essential words that are relevant to the text. This is the “understanding the meaning” step.

“Be” (and its various forms, such as “is,” “am,” and “are”) is a common auxiliary verb. In many NLP tasks, especially keyword-based ones, it can be treated as a stop word, because removing it often does not change the main meaning or sentiment of a sentence. In the example sentence, the remaining words “flowers blooming garden” still convey the main idea: flowers are blooming in a garden.
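Removing stop words amounts to filtering tokens against a list. In this sketch, the stop word list is a small, hand-picked assumption (libraries such as NLTK ship much longer lists), and the sentence “The flowers are blooming in the garden” is an assumed stand-in for the example sentence in the image.

```python
# A small, hand-picked stop word list for this example only.
STOP_WORDS = {"the", "a", "an", "in", "on", "of", "to", "and",
              "be", "is", "am", "are", "was", "were"}

def remove_stop_words(tokens: list[str]) -> list[str]:
    """Keep only the tokens that are not stop words (the check ignores case)."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]

tokens = ["The", "flowers", "are", "blooming", "in", "the", "garden"]
print(remove_stop_words(tokens))  # ['flowers', 'blooming', 'garden']
```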

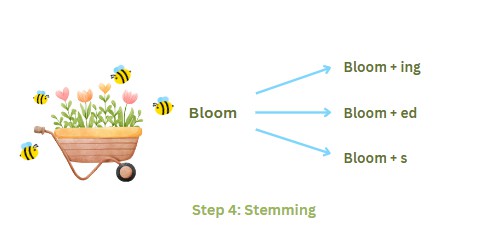

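Stemming: Stemming reduces the different forms of a word to a shared root, or stem, by removing suffixes. For example, “blooms,” “bloomed,” and “blooming” all share the stem “bloom,” so the computer can recognize them as forms of the same action word.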
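In NLP libraries, a stemmer strips suffixes so that different forms of a word map to one shared stem. The sketch below is a deliberately crude suffix stripper with only three hypothetical rules (“-ing,” “-ed,” and “-s”); real stemmers, such as the Porter stemmer, use many more rules.

```python
# Hypothetical suffix rules, longest first so "-ing" is tried before "-s".
SUFFIXES = ["ing", "ed", "s"]

def stem(word: str) -> str:
    """Strip the first matching suffix, if at least 3 letters would remain."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

for w in ["blooms", "bloomed", "blooming", "bloom"]:
    print(w, "->", stem(w))  # every form prints "-> bloom"
```

Stems are not always real words: with these rules, “running” becomes “runn.” That is acceptable for the computer, because all it needs is for related forms to match each other.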

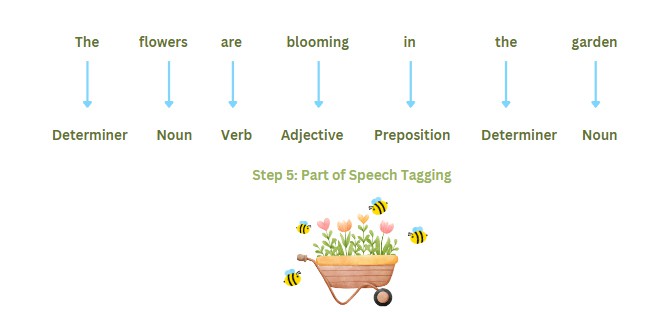

Part-of-Speech Tagging: The next step is to identify the different parts of speech, such as nouns, verbs, adjectives, prepositions, and adverbs, and tag each word with its part of speech.

This step allows the computer to make sense of the questions it is asked. It then puts together an answer based on the part-of-speech tags.
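A toy part-of-speech tagger can simply look each word up in a dictionary. The `LEXICON` here is a tiny, hand-made assumption; real taggers learn from large collections of tagged text and use the surrounding words, because a lookup table cannot tell whether “bat” is a noun or a verb.

```python
# A tiny, hand-made dictionary of words and their parts of speech (hypothetical).
LEXICON = {
    "the": "determiner", "a": "determiner",
    "flowers": "noun", "garden": "noun", "sun": "noun",
    "are": "verb", "blooming": "verb", "shines": "verb",
    "bright": "adjective", "brightly": "adverb",
    "in": "preposition",
}

def pos_tag(tokens: list[str]) -> list[tuple[str, str]]:
    """Pair each token with its part of speech; unknown words get 'unknown'."""
    return [(t, LEXICON.get(t.lower(), "unknown")) for t in tokens]

print(pos_tag(["The", "flowers", "are", "blooming", "in", "the", "garden"]))
```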

Named Entity Tagging: This process teaches computers to identify proper nouns, such as the names of famous people, monuments, cities, and movies. Named entities can also be sorted into subcategories such as “person,” “location,” “quantity,” or “organization.” For example, Canada, USA, John, and Miranda are proper nouns, so the AI tags them as named entities. Similarly, when you ask Siri, “What is Montreal’s weather today?”, Siri tags Montreal as a location and tells you the weather there.
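Named entity tagging can be sketched as a lookup in lists of known names, sometimes called gazetteers. The lists in `ENTITY_LISTS` are hypothetical and tiny; real systems also use context, so they can recognize names they have never seen before.

```python
# Hypothetical lists of known names, grouped by category.
ENTITY_LISTS = {
    "PERSON": {"John", "Miranda"},
    "LOCATION": {"Canada", "USA", "Montreal"},
}

def tag_entities(tokens: list[str]) -> list[tuple[str, str]]:
    """Return (token, category) for every token found in one of the name lists."""
    found = []
    for token in tokens:
        for category, names in ENTITY_LISTS.items():
            if token in names:  # exact, case-sensitive match
                found.append((token, category))
    return found

tokens = ["John", "and", "Miranda", "visited", "Montreal", "."]
print(tag_entities(tokens))
# [('John', 'PERSON'), ('Miranda', 'PERSON'), ('Montreal', 'LOCATION')]
```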

Task 2 – Apply the Knowledge

Ask the students to:

- Pick a short text like a story or a song. Alternatively, the students can write their own stories.

- Apply the NLP steps outlined above to the text to break it down into parts of speech and label each word with the appropriate tag.

- Place the words under the right grammatical categories and label the names (named entities) accordingly. For example, all the verbs, nouns, adjectives, and proper nouns will be placed under their respective categories.
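The sorting in Task 2 can be modelled as grouping (word, tag) pairs by tag. In this sketch, the opening words of the sample text “Teachings from the Past” (“Long ago, the land was kept clean”) were tagged by hand as an assumption for illustration; students’ own tags may differ, and the function name `group_by_category` is hypothetical.

```python
from collections import defaultdict

# Opening words of the sample text, tagged by hand (an assumption for illustration).
tagged = [
    ("Long", "adverb"), ("ago", "adverb"), ("the", "determiner"),
    ("land", "noun"), ("was", "verb"), ("kept", "verb"), ("clean", "adjective"),
]

def group_by_category(pairs):
    """Place each word under its grammatical category, keeping the original order."""
    groups = defaultdict(list)
    for word, tag in pairs:
        groups[tag].append(word)
    return dict(groups)

for category, words in group_by_category(tagged).items():
    print(f"{category}: {', '.join(words)}")
```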

Students can also use the following text for this activity:

Teachings from the Past

Long ago, the land was kept clean so the animals would have secure migration routes. Even bones were not to be disposed of in the water. The health of the sea and its inhabitants was always a consideration. In order to ensure that the fish would thrive, the water was always to be kept clean.

Specific rules were in place for igluit (snow houses). For example, nothing was to be placed near any entrance, because that would make snow pile up at another entryway. In wintertime, igluit were oriented to face the sun. In this way, they would receive sunlight and face the northwest winds; snow does not pile up in entrances when igluit are built to face this direction. Placing igluit in this way meant they would provide good homes throughout the winter.

Areas of land used for long-stay camps were left to rest before a group would make use of them again. For example, the land had to be allowed time—usually at least a year—to get rid of the scent of humans before people returned for another stay. Bones were gathered and placed in one area before leaving the camp.

Ittuqtarniq or anijaarniq is a daily routine for young children during which they observe the weather early in the morning. This experience can provide the first steps toward becoming environmental stewards. If we fail to be keen observers, we will be less able to adapt to conditions that are always changing.

Source: Inuit Principles of Conservation: Serving Others, Shirley Tagalik. The full lesson plan can be found on our website.

Computer Activity

Task 3 – Teachable Machines

In this lesson, students experiment with Teachable Machine and train a computer to identify different animal and bird sounds by creating an Audio Project.

Follow the instructions below:

- Go to Teachable Machine and click the Get Started button. Choose Audio Project.

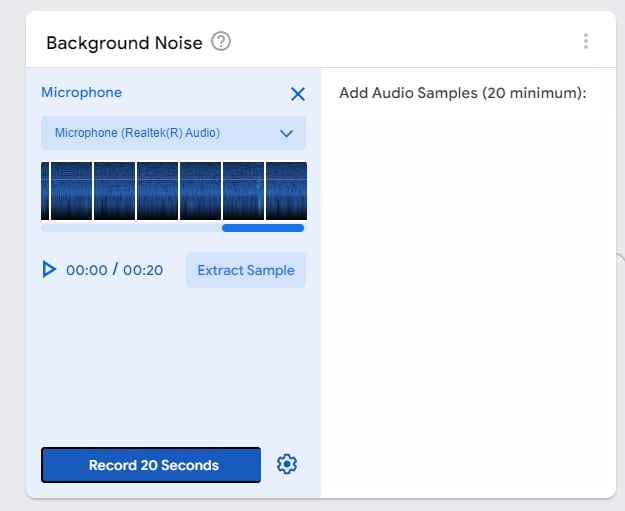

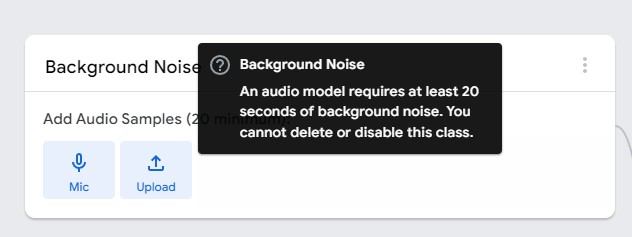

- Once in the project, upload background noise. The background audio must be at least 20 seconds long. This allows the computer to tell the background noise around students apart from the sounds it is being trained to recognize.

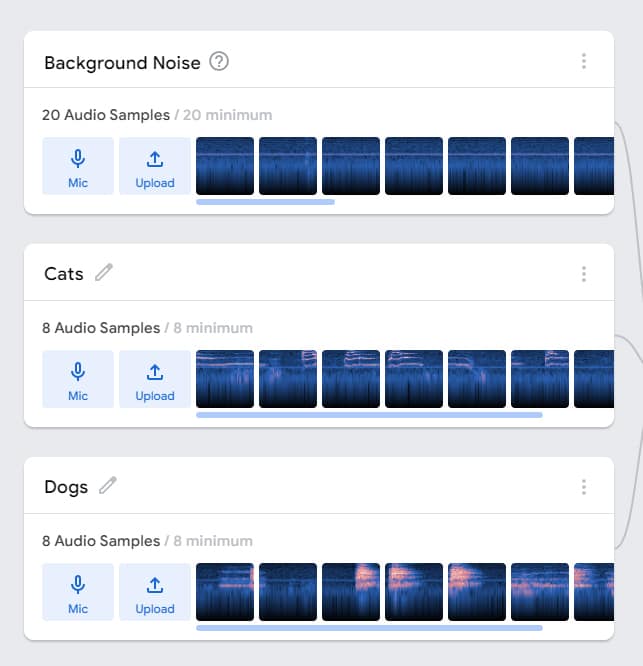

- Once the background noise is uploaded, it’s time to upload various animal sounds (dogs and cats). Our goal is to help the computer distinguish between dog sounds and cat sounds.

- Use phones or other smart devices to find different dog and cat sounds, and play them into the computer’s microphone to record them. There should be at least eight samples for each class (cats and dogs). Once all the samples are uploaded, the project should look like this:

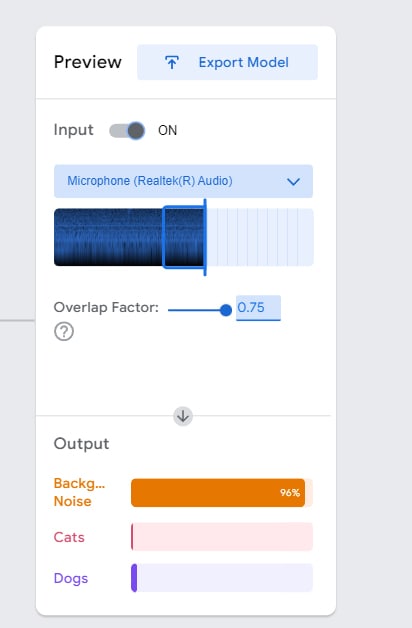

- Now, click Train Model and allow the machine to process the data. This might take a few minutes.

- Once the model is trained, play different animal sounds to the computer and see whether it can identify them. Check the Output tab to see what the model predicts.

Note: When this lesson was written, no animals were present, so the model predicted background noise with 96% confidence. Students can play cat and dog sounds on their smart devices for the machine learning model to make a prediction.

Conclusion

Now that students understand how natural language processing works and how machines process human language, it is time to explore the implications of voice-generated AI for everyday life. Ask students:

- How can AI help protect online safety and privacy? How might it threaten them?

- What experiences have you had with voice-generated AI programs?

- When interacting with voice-generated AI, what should we do to ensure our safety and privacy?

- In what fields might AI prove useful, and how?